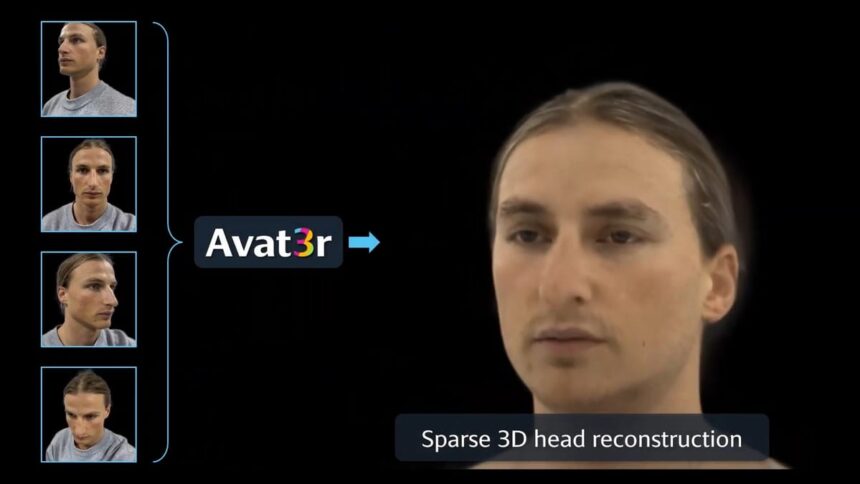

Meta researchers built a “large reconstruction model (LRM)” that can generate an animatable photorealistic avatar head in minutes from just four selfies.

Meta has been researching photorealistic avatar generation and animation for more than six years now, and its highest-quality version even crosses the uncanny valley, in our experience.

One of the biggest challenges for photorealistic avatars to date has been the amount of data and time needed to generate them. Meta’s highest-quality system requires a very expensive specialized capture rig with over 100 cameras. The company has shown research on generating lower-quality avatars with a smartphone scan, but this required making 65 facial expressions over the course of more than three minutes, and the captured data took multiple hours to process on a machine with four high-end GPUs.

Now, in a new paper called Avat3r, researchers from Meta and the Technical University of Munich are presenting a system that can generate an animatable photorealistic avatar head from just four phone selfies, and the processing takes a matter of minutes, not hours.

On a technical level, Avat3r builds on the concept of a large reconstruction model (LRM), leveraging a transformer for 3D visual tasks in the same sense that large language models (LLMs) do for natural language. This is often called a vision transformer, or ViT. This vision transformer is used to predict a set of 3D Gaussians, akin to the Gaussian splatting you may have heard about in the context of photorealistic scenes such as Varjo Teleport, Meta’s Horizon Hyperscapes, Gracia, and Niantic’s Scaniverse.
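To make “predicting a set of 3D Gaussians” a little more concrete, here is a minimal sketch of the data involved. It assumes the standard Gaussian splatting parameterization (position, scale, rotation, opacity, color); the class name, the one-Gaussian-per-input-pixel layout, and the 512×512 resolution are illustrative assumptions, not details taken from the Avat3r paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Gaussian3D:
    """One splat in the standard 3D Gaussian splatting parameterization."""
    position: Tuple[float, float, float]          # center in 3D space
    scale: Tuple[float, float, float]             # extent along each local axis
    rotation: Tuple[float, float, float, float]   # orientation as a quaternion
    opacity: float                                # how opaque the splat is
    color: Tuple[float, float, float]             # RGB (view-independent here)


# 3 (position) + 3 (scale) + 4 (rotation) + 1 (opacity) + 3 (color)
PARAMS_PER_GAUSSIAN = 14


def predicted_values(num_views: int, height: int, width: int) -> int:
    """Number of floats a network would emit under the ASSUMED layout of
    one Gaussian per input pixel (hypothetical, for illustration only)."""
    return num_views * height * width * PARAMS_PER_GAUSSIAN


# Four selfies at a hypothetical 512x512 resolution.
total = predicted_values(num_views=4, height=512, width=512)
print(f"{total:,} predicted values")
```

Under these assumptions, the network's output is a large but fixed-size table of numbers. That is why a transformer can produce it in a single forward pass instead of running a per-person optimization for hours.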

Avat3r’s specific animation implementation isn’t driven by a VR headset’s face and eye tracking sensors, but there’s no reason it couldn’t be adapted to use them as input.

However, while Avat3r’s data and compute requirements for generation are remarkably low, it’s nowhere near suitable for real-time rendering. According to the researchers, the resulting system runs at just 8 FPS on an RTX 3090. Still, in AI it’s common to see subsequent iterations of new ideas achieve orders-of-magnitude optimizations, and Avat3r’s approach shows a promising path to one day letting headset owners set up a photorealistic avatar with a few selfies and minutes of generation time.
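For a rough sense of the gap, the sketch below converts the reported 8 FPS into a per-frame time and compares it with typical VR refresh rates. The 72, 90, and 120 Hz targets are assumptions chosen as common headset refresh rates, not figures from the paper, and the calculation ignores the extra cost of rendering a separate view for each eye.

```python
def frame_time_ms(fps: float) -> float:
    """Time available (or taken) per frame, in milliseconds."""
    return 1000.0 / fps


def required_speedup(current_fps: float, target_hz: float) -> float:
    """How many times faster rendering must get to hit the target rate."""
    return target_hz / current_fps


REPORTED_FPS = 8.0  # figure reported by the researchers on an RTX 3090

print(f"Current: {frame_time_ms(REPORTED_FPS):.1f} ms per frame")
for target_hz in (72, 90, 120):  # assumed headset refresh rates
    print(
        f"{target_hz} Hz needs {frame_time_ms(target_hz):.1f} ms per frame, "
        f"a {required_speedup(REPORTED_FPS, target_hz):.2f}x speedup"
    )
```

By this estimate, even the lowest assumed target needs about an order of magnitude more rendering speed, and that is on a desktop GPU rather than a standalone headset's mobile chipset.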